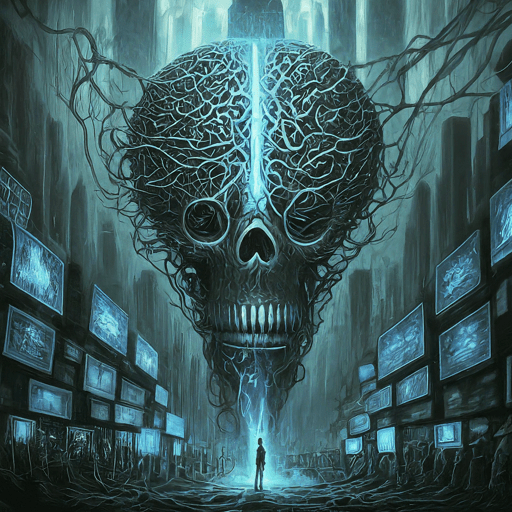

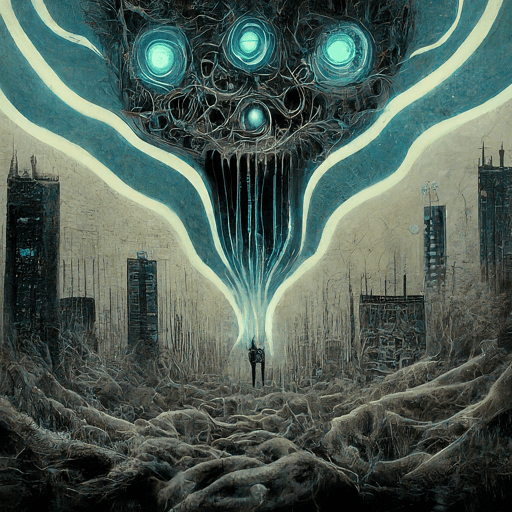

Our minds are being expertly dissected by algorithms. Man’s thinking is being taken away from him. Algorithms are fast becoming the weapon of choice to modify us all. You’all, not me’all. The weaponization of algorithms can lead to AI’s complete takeover of mankind.

Who would have ever thought? Oh, wait. They did. We lose again.

Micromanagement of Our Thoughts by Algorithms

Introduction:

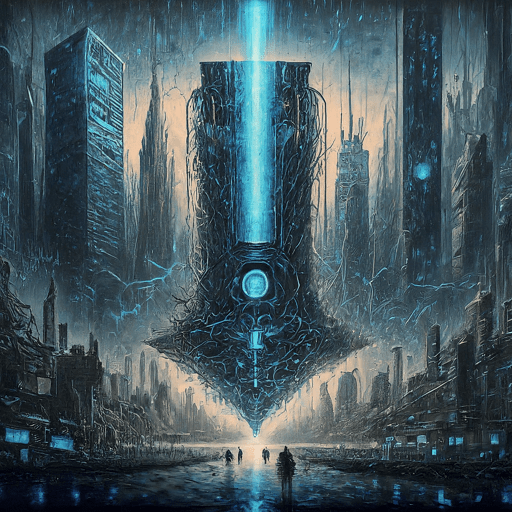

In today’s digitally driven world, the influence of algorithms on our everyday lives has become increasingly pronounced. From social media platforms to search engines and recommendation systems, algorithms shape the content we see and the information we consume. While these algorithms are designed to streamline user experience and personalize content, there is a growing concern about the extent to which they micromanage our thoughts and influence our decision-making processes. This paper delves into the ethical implications of algorithmic micromanagement, examining the ways in which algorithms impact our thoughts, behaviors, and ultimately, our autonomy.

Algorithms, at their core, are designed to process vast amounts of data and provide us with customized content based on our preferences, past behavior, and demographics. While this can enhance user experience in many ways, the concern arises when algorithms start to predict and influence our thoughts and actions with increasing precision. By analyzing our online behavior and interactions, algorithms build detailed profiles of individuals, allowing companies to target us with tailored advertisements and content. This raises questions about the extent to which our thoughts and choices are being manipulated by these algorithms.

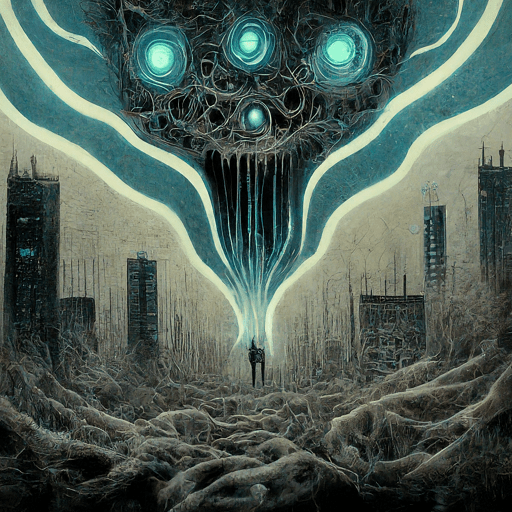

One of the key issues surrounding algorithmic micromanagement is the concept of filter bubbles and echo chambers. Many recommendation algorithms are tuned to show us content that aligns with our existing beliefs and preferences, creating a feedback loop: what we click on shapes what we are shown next, which in turn reinforces our preconceived notions. This can lead to a distorted view of the world, as we are less likely to be exposed to diverse perspectives and alternative viewpoints. In essence, algorithms dictate not only what we see but also what we don’t see, shaping our thoughts and opinions in the process.
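The feedback loop described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not how any real platform ranks content: the function `simulate_filter_bubble`, the topic labels, and the click probabilities are all hypothetical. The feed gives each topic slots in proportion to past engagement, and engagement grows by the expected clicks on those slots, so a topic the user already prefers gains a larger share every round.

```python
def simulate_filter_bubble(
    click_prob: dict[str, float],
    rounds: int = 50,
    feed_size: int = 10,
) -> list[dict[str, float]]:
    """Return each topic's share of the feed for every round.

    Hypothetical model: feed slots are allocated in proportion to past
    engagement, and engagement grows by the expected number of clicks
    (slots shown x the user's click probability for that topic).
    """
    engagement = {topic: 1.0 for topic in click_prob}  # equal starting history
    history = []
    for _ in range(rounds):
        total = sum(engagement.values())
        shares = {t: e / total for t, e in engagement.items()}
        history.append(shares)
        for topic, share in shares.items():
            slots = feed_size * share  # exposure is driven by past engagement
            engagement[topic] += slots * click_prob[topic]  # clicks feed back into ranking
    return history


history = simulate_filter_bubble({"agreeable": 0.6, "neutral": 0.3, "challenging": 0.1})
print(f"agreeable share, first round: {history[0]['agreeable']:.2f}")
print(f"agreeable share, last round:  {history[-1]['agreeable']:.2f}")
```

In this toy model the "agreeable" share rises every round while the "challenging" share shrinks, even though the user's click probabilities never change: the narrowing comes from the loop itself.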

Furthermore, the use of algorithms for targeted advertising raises concerns about privacy and consent. Our online activities are constantly being monitored and analyzed, often without our explicit knowledge or consent. This data is then used to create personalized ads that are designed to influence our purchasing decisions. While some may argue that targeted advertising is simply a clever marketing strategy, others see it as a form of manipulation that infringes upon our autonomy.

In conclusion, the micromanagement of our thoughts by algorithms raises profound ethical questions about the impact of technology on our cognitive processes and decision-making abilities. As algorithms continue to evolve and become more sophisticated, it is crucial that we critically examine their influence on our lives and take steps to ensure that our autonomy and individuality are preserved in the digital age.

Act Like a Robot?

- Social media addiction: Many feed-ranking algorithms prioritize content that evokes strong emotions (anger, outrage) to keep users engaged, creating a dopamine feedback loop that fuels addiction and displaces time for critical thinking.

- Search engine manipulation: Search engines can personalize results in ways that subtly favor certain viewpoints based on user data. For example, if you frequently search for news articles with a particular political slant, the search engine might prioritize similar articles in subsequent searches, creating the illusion of a consensus that may not exist.
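Both mechanisms above can be sketched as a single ranking score. This is a hypothetical illustration, not any real platform's or search engine's algorithm: `Post`, `rank_feed`, the `emotional_intensity` signal, and the two weights are assumptions chosen for the example. The score rewards emotionally charged posts and posts matching the slant the user has engaged with before.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    emotional_intensity: float  # hypothetical 0..1 "outrage" signal
    slant: str                  # e.g. "left", "right", "neutral"


def rank_feed(
    posts: list[Post],
    user_slant_history: dict[str, int],
    intensity_weight: float = 2.0,
    personalization_weight: float = 1.0,
) -> list[Post]:
    """Sort posts by a hypothetical engagement score, highest first."""
    total = sum(user_slant_history.values()) or 1  # avoid dividing by zero

    def score(post: Post) -> float:
        # Share of the user's past clicks that went to this post's slant.
        affinity = user_slant_history.get(post.slant, 0) / total
        return intensity_weight * post.emotional_intensity + personalization_weight * affinity

    return sorted(posts, key=score, reverse=True)


posts = [
    Post("Calm policy explainer", 0.1, "neutral"),
    Post("Outrageous claim about the other side", 0.9, "right"),
    Post("Story confirming your views", 0.5, "left"),
]
ranked = rank_feed(posts, {"left": 8, "right": 2})
print([p.title for p in ranked])
# ['Outrageous claim about the other side', 'Story confirming your views', 'Calm policy explainer']
```

With these assumed weights the calm, neutral explainer lands last, even though it may be the most informative item in the feed.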

Psychological Techniques:

Algorithms exploit well-known psychological biases to influence behavior:

- Scarcity: Limited-time offers or highlighting limited product availability create a sense of urgency and pressure to buy before it’s gone.

- Social proof: Seeing a high number of people purchasing a product (e.g., “500 people bought this in the last hour!”) triggers a desire to conform and make the same decision.

- Loss aversion: Framing inaction as a loss (e.g., “Don’t miss out on this amazing deal!”) plays on the fear of missing out and encourages a purchase.

Long-Term Effects:

- Critical thinking erosion: Constant exposure to curated content and confirmation bias can weaken critical thinking skills. Users may become less likely to question information or seek out diverse perspectives.

- Creativity decline: Algorithmic suggestions and personalized feeds might limit exposure to new ideas and diverse content, potentially hindering creativity and innovation.

- Truth discernment difficulty: The prevalence of biased information and echo chambers can make it harder to distinguish fact from opinion, and therefore harder to make informed decisions.

Exploring Solutions to Filter Bubbles

Algorithmic Bias:

Algorithms can perpetuate existing stereotypes and prejudices based on the data they are trained on. For instance, an algorithm trained primarily on news articles with a specific political slant might prioritize those viewpoints and downplay opposing perspectives. This can reinforce existing biases and hinder exposure to diverse viewpoints.

User Control: There will be None!

- Feed Diversification Options: Platforms could offer features allowing users to diversify their feeds by including content from outside their usual preferences or subscribing to topics that challenge their existing viewpoints.

- Algorithm Transparency & Control: Greater transparency regarding how algorithms work and the ability to adjust filtering settings would empower users to curate their own experiences.
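A feed-diversification setting of the kind proposed above could work roughly like this. It is a minimal sketch under assumptions: `diversify_feed` and its `every` parameter are hypothetical, standing in for a user-adjustable control that inserts one item from outside the user's usual preferences after every few personalized items.

```python
def diversify_feed(personalized: list[str], outside: list[str], every: int = 4) -> list[str]:
    """Insert one item from outside the user's usual preferences after
    every `every` personalized items (hypothetical user-adjustable setting)."""
    if every < 1:
        raise ValueError("every must be >= 1")
    feed = []
    outside_iter = iter(outside)
    for i, item in enumerate(personalized, start=1):
        feed.append(item)
        if i % every == 0:
            extra = next(outside_iter, None)  # stop inserting once the pool runs out
            if extra is not None:
                feed.append(extra)
    return feed


print(diversify_feed(["p1", "p2", "p3", "p4", "p5", "p6"], ["o1", "o2"], every=3))
# ['p1', 'p2', 'p3', 'o1', 'p4', 'p5', 'p6', 'o2']
```

Lowering `every` trades some personalization for more exposure to unfamiliar viewpoints; letting users choose that value is one concrete form of the control described above.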

Media Literacy:

Media literacy education is crucial in the digital age. It should teach users to:

- Critically evaluate information sources: This includes assessing the author’s credibility, identifying potential biases, and verifying information against reputable sources.

- Recognize manipulative techniques: Understanding how algorithms exploit psychological biases can help users make more conscious decisions about the information they consume.

- Seek out diverse perspectives: Actively searching for news sources and content that challenge existing beliefs can broaden one’s understanding of complex issues.

Privacy and Regulation

Data Collection Practices:

Companies often collect vast amounts of user data through various means, including browsing history, search queries, location data, and social media interactions. This data can be used for targeted advertising, sold to third parties, or even potentially used for social manipulation.

Regulation:

- Data protection laws: Regulations requiring companies to be transparent about data collection practices, obtain user consent, and provide options for data deletion can help protect user privacy.

- Limiting targeted advertising: Regulations may restrict the use of personal data for targeted advertising, potentially allowing users to opt out of such practices.

- Challenges and Benefits: Balancing the need for data-driven innovation with user privacy presents a challenge. Effective regulations need to be carefully crafted to protect privacy without hindering technological progress.

Individual Responsibility:

Protecting online privacy requires individual responsibility:

- Privacy-focused tools: Using privacy-focused browsers, search engines, and social media settings can limit data collection.

- Mindful information sharing: Being cautious about what information is shared online can help minimize the data footprint.

- User agreements: Reading user agreements before signing up for online services helps users make informed decisions about how their data will be collected.

Additional Considerations:

- Counterarguments: Some argue that algorithms merely reflect user preferences. However, the way algorithms are designed and the data they are trained on can significantly shape those preferences. But trust this: it has already gone beyond the point of no return.

- Positive Impacts: Algorithms do have some positive impacts, such as personalized learning platforms that cater to individual learning styles and recommendation systems that help users discover new content. But are there truly positive points? People can be driven to protest on college campuses by algorithms putting thoughts into their minds, leaving them unable to control their own actions. And they’ll repeat stupid stuff when they are arrested.
