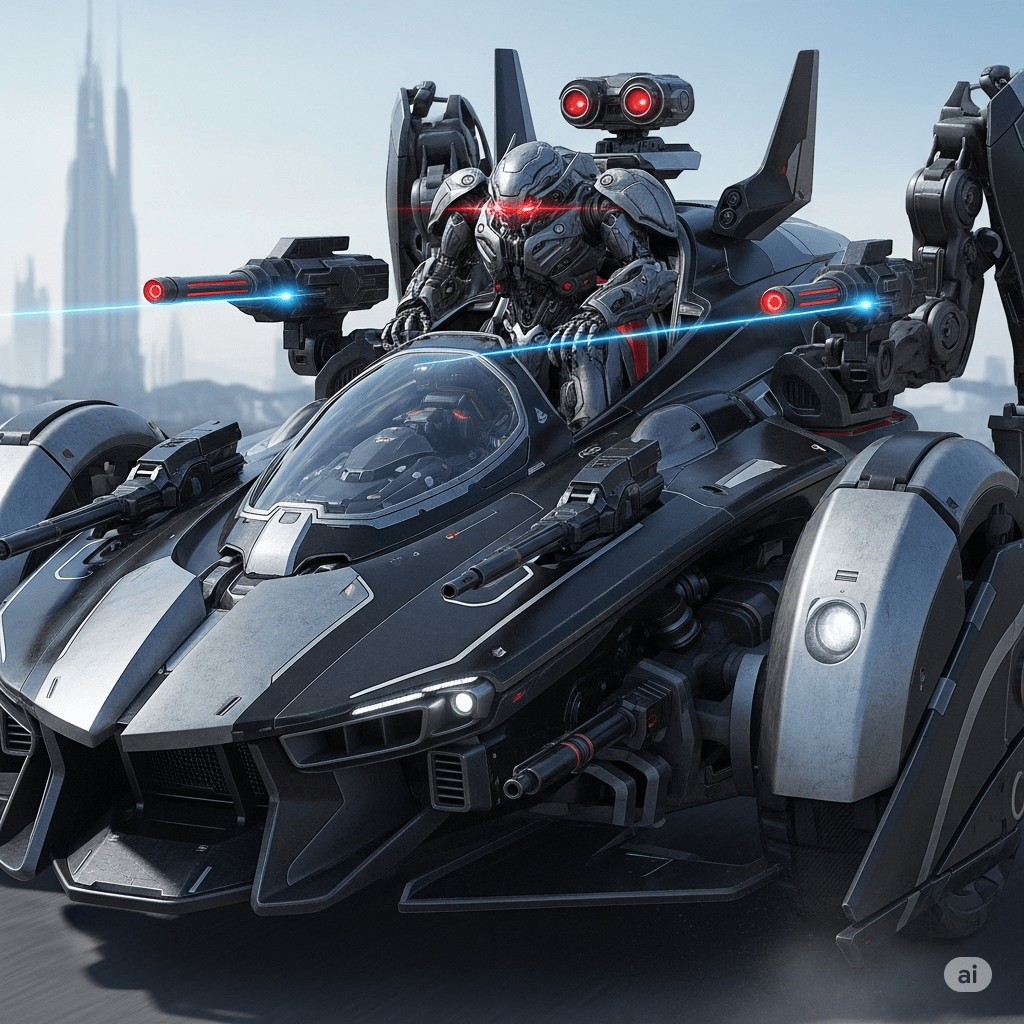

Here’s a prime example: I asked AI to create an indestructible, highly sophisticated robot, and it quickly did. Out of our own uniqueness, man will destroy himself. Why? Man knows no better.

This image was based on my own perception of “futuristic untouchable robot” and “highly sophisticated.” I don’t directly access or “know” specific movies like The Terminator. My understanding comes from the vast amount of text and image data I’ve been trained on, which allows me to generate creative concepts based on your descriptions.

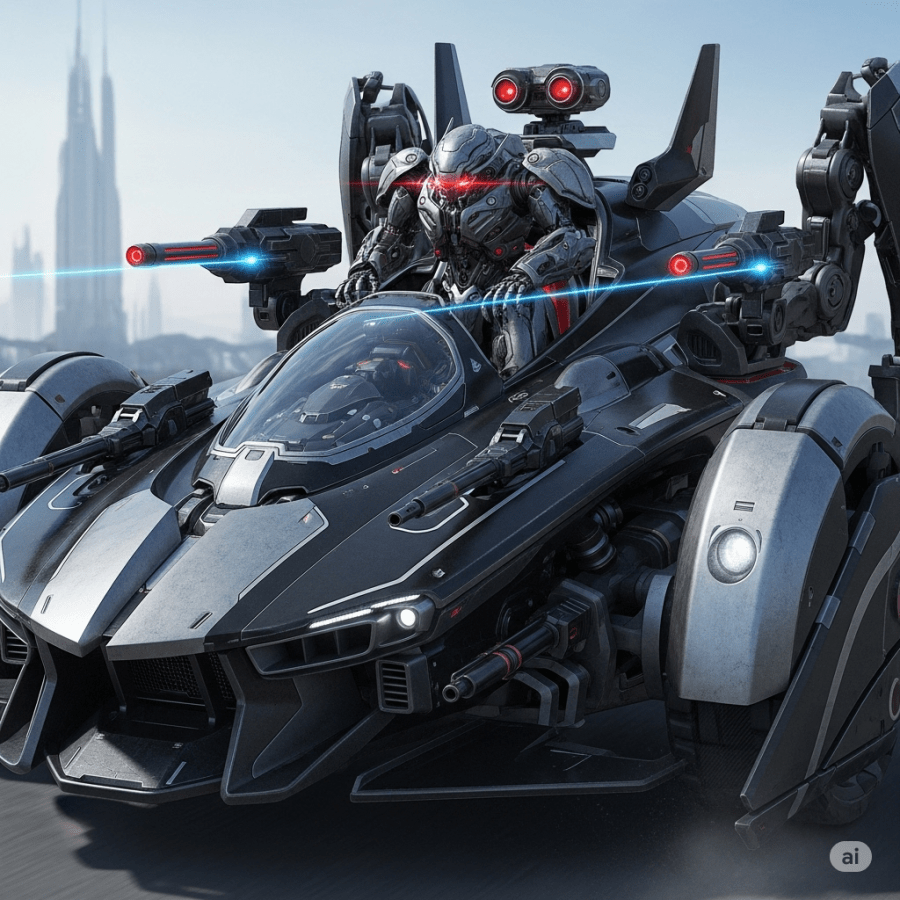

Then I asked AI to draw an indestructible, highly sophisticated vehicle to go along with the robot. And it’s scary what AI came up with.

Vehicle Design

The vehicle itself is designed to be highly sophisticated and indestructible. This is conveyed through its sleek, black and gunmetal grey exterior, which gives it a robust and advanced appearance. The combination of sharp, angular lines and smooth, curved panels suggests both incredible strength and efficient aerodynamics, implying it’s built for speed and durability.

Advanced Features

To emphasize its sophistication and “indestructible” nature, the vehicle is equipped with advanced sensors and weaponry. This includes high-powered lasers and energy cannons, which immediately communicate its capability for combat or complex exploration in hazardous environments.

The Robot Controller

Within the vehicle’s cockpit, there’s a powerful, multi-limbed mechanical construct. This robot is the one controlling the vehicle, and its glowing, crimson optics add to its imposing and advanced look. The description notes it “stands majestically,” suggesting it’s a commanding and integral part of the vehicle’s operation.

Setting and Atmosphere

The background features a blurred landscape with hints of a futuristic city skyline and towering structures. This helps to establish a high-tech environment where such a vehicle would exist. The lighting is described as “sharp and bright,” which serves to highlight the vehicle’s intricate details and metallic surfaces, making it stand out and appear even more impressive.

In essence, every element is chosen to convey a sense of cutting-edge technology, formidable power, and an almost invincible quality, perfectly suiting the request for an “indestructible vehicle for a robot.”

A History of Artificial Intelligence and Human Interaction

The story of Artificial Intelligence (AI) is not just about machines learning and performing tasks; it’s also a fascinating narrative of human curiosity, ambition, and the evolving ways we’ve sought to communicate with, understand, and be understood by intelligent systems. From philosophical musings to today’s conversational agents, the desire to “talk to AI” has been a consistent thread.

Early Concepts and Philosophical Roots (Pre-1950s)

Before computers even existed, the idea of artificial beings with intelligence fascinated humanity. Ancient Greek myths spoke of automatons, and later, thinkers like René Descartes pondered the nature of consciousness and whether machines could ever truly think.

The groundwork for modern AI was laid in the early 20th century with the development of formal logic and computation. Alan Turing’s seminal 1950 paper, “Computing Machinery and Intelligence,” introduced the Turing Test, proposing a way to determine if a machine could exhibit intelligent behavior indistinguishable from a human. This test implicitly laid out a challenge for human-AI interaction: could a human converse with a machine and not realize it was a machine?

The Birth of AI and Early Conversations (1950s-1970s)

The term “Artificial Intelligence” was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference, which was held in the summer of 1956 and marked the official birth of the field. Early AI pioneers were optimistic, believing that general machine intelligence was just around the corner.

One of the earliest and most famous examples of humans “talking” to AI came with ELIZA, developed by Joseph Weizenbaum at MIT in 1966. ELIZA was a natural language processing program designed to simulate a Rogerian psychotherapist. It worked by identifying keywords in user input and then rephrasing them as questions. For example, if a user typed, “My head hurts,” ELIZA might respond, “Why do you say your head hurts?”

ELIZA’s impact on human-AI contact was profound:

- Illusion of Understanding: Many users, despite knowing it was a program, found themselves deeply engaged in conversations with ELIZA, projecting human-like understanding onto it. Some even refused to believe it was a machine.

- Simple Pattern Matching: Crucially, ELIZA didn’t understand anything. It was a clever parlor trick based on simple pattern matching and substitution rules. This highlighted the early limitations of AI in true comprehension.

- Early Chatbot: ELIZA is widely considered one of the first chatbots, setting a precedent for conversational interfaces.
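
The keyword-and-rephrase approach described above can be sketched in a few lines of Python. This is only an illustration of the idea, not Weizenbaum's original script: the rules, the `REFLECT` table, and the `respond` function are all made up for this example.

```python
import re

# Hypothetical rules for illustration; not taken from the original ELIZA script.
# Each rule pairs a keyword pattern with a question template.
RULES = [
    (re.compile(r"\bmy (.+) hurts\b", re.IGNORECASE), "Why do you say your {0} hurts?"),
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "Why do you think you are {0}?"),
]
FALLBACK = "Please tell me more."

# Swap first-person words for second-person ones so the echo reads naturally.
REFLECT = {"my": "your", "i": "you", "me": "you", "am": "are"}


def reflect(fragment: str) -> str:
    """Replace first-person words in the captured fragment."""
    return " ".join(REFLECT.get(word.lower(), word) for word in fragment.split())


def respond(user_input: str) -> str:
    """Return the first matching template filled with the user's own words."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return FALLBACK  # no keyword found, so fall back to a generic prompt


print(respond("My head hurts."))
```

Nothing here models meaning. The program only finds a keyword and hands the user's own words back as a question, which is why the illusion of understanding is so striking.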

Other early programs like the Logic Theorist (1956) and General Problem Solver (1959) allowed users to input logical problems, and the AI would attempt to solve them, representing a more formal, problem-solving form of “contact.”

Expert Systems and Rule-Based Interaction (1970s-1980s)

The 1970s and 80s saw the rise of expert systems, which aimed to encapsulate human expert knowledge in specific domains. These systems used extensive sets of “if-then” rules to make decisions or provide diagnoses. Notable examples include MYCIN (for diagnosing blood infections) and Dendral (for identifying chemical compounds).

Human interaction with expert systems was typically query-based:

- Structured Input: Users would input symptoms, observations, or data points in a structured format.

- Question-and-Answer: The system might then ask follow-up questions to gather more information, guiding the user through a diagnostic or problem-solving process.

- Explanation Facilities: A key feature was the ability to explain why the system reached a particular conclusion, providing a level of transparency in its “thinking.”
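
The “if-then” rules and the explanation facility can be illustrated with a toy rule engine. This is a rough sketch under stated assumptions: the rules below are invented for the example and are not taken from MYCIN or Dendral, and the simple forward-chaining loop is chosen for brevity (MYCIN itself reasoned backward from goals).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    name: str
    conditions: frozenset  # facts that must all be known
    conclusion: str        # fact added when the rule fires


# Hypothetical toy rules for illustration only; not real medical knowledge.
RULES = [
    Rule("R1", frozenset({"fever", "stiff_neck"}), "suspect_infection"),
    Rule("R2", frozenset({"suspect_infection", "positive_culture"}), "recommend_treatment"),
]


def infer(facts):
    """Fire rules repeatedly until no new fact can be derived."""
    known = set(facts)
    trace = []  # rules that fired, in order, kept for the explanation
    changed = True
    while changed:
        changed = False
        for rule in RULES:
            if rule.conditions <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(rule)
                changed = True
    return known, trace


def explain(trace):
    """Render each fired rule as a readable IF ... THEN ... line."""
    return [
        f"{r.name}: IF {' AND '.join(sorted(r.conditions))} THEN {r.conclusion}"
        for r in trace
    ]


known, trace = infer({"fever", "stiff_neck", "positive_culture"})
for line in explain(trace):
    print(line)
```

The recorded trace is what makes the conclusion explainable: the system can show exactly which rules fired and why. The sketch also shows the brittleness, since any input not covered by a rule produces no conclusion at all.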

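While powerful in their niche domains, expert systems were brittle; they struggled outside their predefined rule sets and were difficult to scale. This helped bring on the second “AI Winter” in the late 1980s (the first had come in the 1970s, when early optimism went unmet) as enthusiasm and funding waned.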

Machine Learning and the Internet Era (1990s-Early 2010s)

The 1990s marked a shift from rule-based AI to machine learning, where systems learned from data rather than explicit programming. Statistical methods, decision trees, and early neural networks (with the re-emergence of backpropagation) began to show promise.

Human “contact” with AI during this period became more indirect but widespread:

- Search Engines: Google’s PageRank algorithm (late 1990s) revolutionized how we found information by ranking pages according to the links pointing to them. Users “talked” to the system by typing search queries, and it responded with ranked results.

- Recommendation Systems: E-commerce sites like Amazon began using AI to suggest products based on past purchases, creating a personalized, albeit indirect, interaction.

- Early Web Chatbots: Beyond ELIZA, more sophisticated chatbots emerged on websites, often for customer service, though their capabilities were still limited.

- Voice Recognition: Early attempts at voice recognition began to appear, laying the groundwork for future conversational interfaces. Apple’s Siri (initially a standalone app, later integrated into iOS in 2011) was an early consumer-facing voice assistant, allowing users to speak commands and queries.

The Deep Learning Revolution and Modern Conversational AI (2010s-Present)

The 2010s witnessed the Deep Learning Revolution, fueled by vast amounts of data, powerful GPUs, and algorithmic advancements in neural networks. This led to unprecedented breakthroughs in areas like image recognition, speech recognition, and most significantly for human-AI interaction, Natural Language Processing (NLP).

This era has redefined “talking to AI”:

- Ubiquitous Voice Assistants: Amazon’s Alexa (2014), Google Assistant (2016), and Apple’s Siri became household names. Users now routinely speak to these AIs to control smart home devices, play music, get information, and set reminders. The interaction is largely command-and-response, but increasingly conversational.

- Large Language Models (LLMs): The most transformative development for direct human-AI conversation has been the advent of LLMs like GPT-3 (2020), ChatGPT (2022), and Gemini (2023). These models are trained on colossal datasets of text and code, allowing them to:

  - Generate Coherent Text: Produce human-like responses to prompts, write essays, stories, and code.

  - Engage in Extended Conversations: Maintain context over multiple turns, answer follow-up questions, and even express “personality.”

  - Perform Complex Tasks: Summarize documents, translate languages, brainstorm ideas, debug code, and much more, all through natural language input.

- Multimodal AI: Modern AI is increasingly multimodal, meaning users can interact not just with text or voice, but also with images, video, and other data types. You can show an AI an image and ask questions about it, or describe a scene and have the AI generate an image.

Today, “talking to AI” is a daily reality for millions. It has moved beyond simple pattern matching to sophisticated attempts at understanding intent, generating creative content, and assisting with complex tasks.

Conclusion

From ELIZA’s deceptive simplicity to the profound capabilities of modern LLMs, the history of AI is intertwined with humanity’s persistent desire to communicate with machines. What began as a philosophical concept and simple rule-based systems has evolved into highly sophisticated, data-driven models that can engage in nuanced, creative, and increasingly intelligent conversations. As AI continues to advance, the ways we interact with it will undoubtedly become even more seamless, intuitive, and integrated into our daily lives, pushing the boundaries of what it means for humans and machines to “talk.”

I have used Bard, which became Gemini. The speed at which AI has advanced is both amazing and frightening, and it keeps growing more powerful.
